Website downtime represents one of the most critical challenges facing online businesses today. When your website becomes inaccessible to users, every second counts—not just in lost revenue, but in eroded customer trust, damaged search rankings, and competitive disadvantage. This comprehensive guide provides everything you need to understand, prevent, and respond to website downtime effectively.

What Is Website Downtime?

Website downtime occurs when your website becomes partially or completely inaccessible to visitors. During these periods, users attempting to reach your site encounter error messages, slow loading times, or complete inability to connect. Downtime represents any moment when your website fails to deliver the experience users expect.

The technical definition extends beyond simple unavailability. Downtime includes periods when your website loads so slowly that users abandon it, when specific pages or features malfunction, or when server errors prevent proper content delivery. Each scenario represents a failure in the continuous availability that modern internet users demand.

Understanding downtime requires recognizing that websites operate within complex digital ecosystems. Your site depends on hosting servers, network infrastructure, content delivery networks, databases, third-party services, and countless other components. Failure in any single element can trigger downtime that impacts your entire online presence.

The True Cost of Website Downtime in 2025

Recent research reveals that website downtime costs have reached unprecedented levels. Global 2000 companies lose approximately $400 billion annually to downtime, representing 9% of their total profits. These staggering figures underscore how digital availability directly impacts bottom-line business performance.

The average cost per minute of downtime has escalated to $14,056 for all organizations and $23,750 for large enterprises. This represents a dramatic 150% increase from the widely cited baseline of $5,600 per minute established in 2014. The surge reflects growing digital dependency where even brief interruptions cascade into massive financial losses.

Industry-specific impacts vary significantly. E-commerce businesses face immediate revenue loss when customers cannot complete transactions. A major retailer experiencing a three-hour outage during peak shopping season lost $2.3 million in immediate sales, but the real damage extended far beyond—customer trust plummeted, leading to sustained increases in cart abandonment and decreased repeat purchases totaling over $8.7 million.

Financial services institutions face particularly severe consequences, with average annual losses reaching $152 million and costs ranging from $12,000 per minute to as much as $9.3 million per hour for major banks. The sector's regulatory environment compounds these costs, as downtime often triggers compliance violations and regulatory fines.

Healthcare systems confront unique cost structures where patient safety implications outweigh pure financial calculations. Medium hospitals experience costs of $1.7 million per hour for electronic health record outages, while large hospitals face $3.2 million hourly. The average healthcare incident costs $740,357, with the sector suffering $14.7 billion in total costs from ransomware-related downtime in recent years.

Beyond immediate financial losses, downtime inflicts lasting damage through multiple channels. Customer trust erosion proves particularly insidious—research shows that 64% of consumers become less likely to trust a business after experiencing a website crash. Only 11% of users say they would return to a website after encountering it offline just once, highlighting how single incidents can permanently lose customers.

Types of Website Downtime

Planned Downtime

Planned downtime encompasses scheduled periods when websites are deliberately taken offline for maintenance, updates, or system enhancements. Organizations typically schedule these activities during low-traffic periods to minimize user impact. Common reasons include server maintenance, software updates, security patches, hardware upgrades, and infrastructure improvements.

Effective planned downtime management requires advance communication with users, strategic timing during off-peak hours, clearly defined maintenance windows, and contingency plans for unexpected complications. The average enterprise incurs approximately $5.6 million annually in planned downtime costs, so managing planned downtime well matters despite its scheduled nature.

Best practices for planned downtime include announcing maintenance well in advance through multiple channels, implementing maintenance mode pages that inform users of expected duration, scheduling updates during historically low-traffic periods, and using load balancers or redundant systems to maintain partial functionality where possible.

Unplanned Downtime

Unplanned downtime occurs unexpectedly due to unforeseen incidents—hardware failures, software glitches, cyberattacks, traffic surges, or human errors. This category represents the more dangerous and costly form of downtime because it strikes without warning and often at the worst possible moments.

Statistics reveal that 82% of companies have experienced at least one unplanned downtime incident over the past three years, with most suffering two or more such events. The unpredictable nature of these outages compounds their impact, as organizations lack time to prepare mitigation strategies or communicate with affected users.

Unplanned downtime sources include server failures from hardware malfunctions or capacity limits, network issues disrupting connectivity, database crashes preventing data access, DNS problems making sites unreachable, DDoS attacks overwhelming servers with malicious traffic, security breaches forcing systems offline, software bugs causing critical failures, and third-party service outages affecting dependent systems.

Partial Downtime

Partial downtime represents a particularly insidious form where websites remain technically online but specific pages, features, or functionalities fail. Users can access some content while other critical elements remain unavailable. This scenario often goes undetected longer than complete outages because surface-level monitoring shows the site as operational.

Examples include checkout systems failing while product pages load normally, search functionality becoming unavailable, user authentication systems preventing logins, media files failing to display, forms refusing to submit, and specific geographic regions experiencing access problems while others work fine.

Partial downtime proves especially damaging because users who successfully reach your site then encounter broken functionality, creating frustration that might exceed the impact of finding the site completely offline. The intermittent nature also complicates diagnosis and resolution.

Root Causes of Website Downtime

Server and Infrastructure Issues

Server problems represent the most common downtime cause. Hardware failures occur when physical components malfunction: power supplies fail, hard drives crash, memory modules develop errors, cooling systems break down, or network interface cards stop working. Even well-maintained data centers experience occasional hardware failures that can take entire servers offline.

Server overload happens when traffic exceeds available capacity. Popular content going viral, successful marketing campaigns, seasonal traffic spikes, or coordinated bot attacks can overwhelm server resources. When servers cannot handle the request volume, they slow dramatically or crash completely.

Hosting provider infrastructure issues extend beyond individual server problems. Power outages, cooling system failures, network backbone disruptions, and natural disasters striking data centers can affect hundreds or thousands of websites simultaneously. The quality and reliability of your hosting provider directly influence downtime frequency.

Network and Connectivity Problems

Network issues prevent users from reaching websites even when servers function perfectly. Internet service provider problems, routing errors, DNS server failures, content delivery network issues, and bandwidth limitations can all sever the connection between users and your site.

DNS failures prove particularly problematic because they make websites completely unreachable despite servers operating normally. When DNS servers fail to translate domain names into IP addresses, users cannot establish connections. DNS propagation delays following configuration changes can also create temporary accessibility issues.

Software and Application Errors

Software bugs in website code, content management systems, e-commerce platforms, or custom applications can trigger downtime. A single coding error deployed to production can crash entire websites. Plugin conflicts, incompatible theme updates, and corrupted databases frequently cause WordPress and similar CMS platforms to fail.

Application dependencies create vulnerability chains where problems in one component cascade throughout the system. If your website relies on external APIs, payment gateways, or third-party services, failures in those dependencies can render your site partially or completely non-functional.

Security Attacks and Malicious Activity

Cyberattacks deliberately aim to take websites offline or compromise their functionality. Distributed Denial of Service (DDoS) attacks flood servers with traffic from multiple sources simultaneously, overwhelming capacity and preventing legitimate users from accessing sites. These attacks have become increasingly sophisticated and difficult to mitigate.

Malware infections can corrupt website files, inject malicious code, or compromise server resources. Ransomware attacks encrypt website data and hold it hostage, forcing organizations to choose between paying ransoms or rebuilding from backups. SQL injection attacks, cross-site scripting, and other exploits can compromise website security and cause downtime during remediation.

Human Error

Human mistakes account for a significant portion of unplanned downtime. Mistyped commands in server administration can take sites offline. Incorrect configuration changes, accidental file deletions, database query errors, and failed deployment processes all stem from human error.

Maintenance mistakes represent another common category—engineers performing routine updates accidentally break dependencies, misconfigure settings, or overlook critical compatibility requirements. The complexity of modern web infrastructure increases opportunities for human error at every touchpoint.

Third-Party Dependencies

Modern websites rely extensively on third-party services for functionality, creating external dependencies that introduce downtime risk. Content delivery networks, payment processors, authentication services, API providers, analytics platforms, and advertising networks all represent potential failure points.

When third-party services experience outages, dependent websites suffer consequences despite their own infrastructure functioning properly. The 2017 Amazon S3 cloud outage demonstrated this vulnerability, affecting numerous companies from Apple and Venmo to Slack and Trello, with total financial costs exceeding $150 million.

How Website Downtime Affects SEO Rankings

Search engines prioritize user experience, making website availability a critical ranking factor. When Google's crawler (Googlebot) attempts to access your site during downtime, it receives error codes or connection failures signaling unavailability. The impact depends significantly on downtime duration and frequency.

Short-Term Downtime Impact

Brief outages lasting a few hours typically do not significantly harm search rankings. Google engineer John Mueller has stated that temporary downtime of less than 24 hours should not trigger major ranking drops. The search engine recognizes that occasional technical issues affect all websites and does not immediately penalize sites for brief unavailability.

However, even short downtime causes ranking fluctuations. Mueller notes that rankings may experience a period of flux lasting one to three weeks following just one day of server downtime. During this period, pages may temporarily drop in position as Google recrawls the site and reassesses its stability.

Extended Downtime Consequences

Longer outages inflict severe SEO damage. If your website returns HTTP 500 error codes repeatedly over several days, Google begins deindexing pages. Research by Moz found that intermittent 500 internal server errors caused tracked keywords to plummet in search results, often dropping completely out of the top 20 positions.

Websites unavailable for more than 24-48 hours risk having pages removed from Google's index entirely. Once deindexed, pages lose all search visibility and traffic. Recovery requires not only fixing technical issues but also resubmitting sitemaps, requesting recrawling, and waiting for Google to restore confidence in your site's reliability.

Mueller estimates it takes roughly a week of downtime before significant drops in indexed pages become noticeable. Since Google recrawls important URLs more frequently, high-value pages drop from the index faster during sustained outages. Less critical pages may remain indexed longer but eventually disappear if downtime persists.

Crawl Frequency Reduction

Repeated downtime incidents train Google to crawl your site less frequently. When Googlebot repeatedly encounters unavailable pages, it reduces crawl frequency to avoid wasting resources on unreliable sites. This reduced attention means updates and new content take longer to appear in search results, even after resolving downtime issues.

Studies show that affected pages eventually receive fewer crawls per day, suggesting Googlebot visits pages less frequently the more often it encounters server errors. The effects compound: longer downtime leads to less frequent crawling, which delays recovery and extends the period of reduced visibility.

Recovery Timeline

Recovery from downtime-related ranking drops follows predictable patterns. Short outages (under 24 hours) typically see rankings normalize within one to three weeks. Important pages return to the index faster as Google recrawls them more frequently. As pages return, they usually resume previous ranking positions, though it may take time for all signals to reassociate.

Recovering from extended outages proves more challenging. If your site was down for multiple days or weeks, recovery can take months. You must first resolve technical issues, then resubmit sitemaps, request recrawling through Google Search Console, and demonstrate consistent uptime before Google restores full crawl frequency and ranking positions.

Bounce Rate and User Experience Impact

Beyond direct crawling concerns, downtime can damage rankings indirectly through user behavior. When users encounter unavailable pages, they immediately return to search results (bouncing), which suggests a poor experience. Google has said it does not use analytics bounce rate as a direct ranking signal, so treat this as an indirect effect rather than a measured penalty.

Google's ranking systems do consider page experience, including page speed and mobile-friendliness. Frequent downtime undermines that experience and can lead Google to favor more reliable competitors. Because the search engine focuses on delivering useful results, consistently unavailable sites tend to lose rankings over time.

Best Practices for SEO During Downtime

Minimize SEO damage during necessary maintenance by returning HTTP 503 "Service Unavailable" status codes rather than 404 or 500 errors. The 503 code signals temporary unavailability, instructing search engines to check back later rather than deindexing pages.

Create informative maintenance mode pages that explain the situation, provide estimated resolution times, and maintain your site's branding. While these pages won't prevent all ranking fluctuations, they demonstrate professional handling of necessary downtime.
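To make the 503 advice concrete, here is a minimal sketch using only Python's standard library. It is a stand-in server that answers every request with `503 Service Unavailable` and a short branded page. It also sends a `Retry-After` header, a standard HTTP header that hints when clients should try again. The one-hour retry value, the page text, and the function names are illustrative assumptions. In practice you would usually configure this in your web server, load balancer, or CDN rather than run a separate Python process.

```python
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

# Illustrative values: set these from your actual maintenance window.
RETRY_AFTER_SECONDS = 3600
MAINTENANCE_HTML = (
    b"<!doctype html><html><head><title>Scheduled maintenance</title></head>"
    b"<body><h1>We'll be back soon</h1>"
    b"<p>Scheduled maintenance is in progress and should take about one hour.</p>"
    b"</body></html>"
)


class MaintenanceHandler(BaseHTTPRequestHandler):
    """Answers every path with 503 so crawlers treat the outage as temporary."""

    def _send_maintenance(self, include_body: bool) -> None:
        self.send_response(503, "Service Unavailable")
        self.send_header("Retry-After", str(RETRY_AFTER_SECONDS))  # hint for when to retry
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(MAINTENANCE_HTML)))
        self.end_headers()
        if include_body:
            self.wfile.write(MAINTENANCE_HTML)

    def do_GET(self) -> None:
        self._send_maintenance(include_body=True)

    def do_HEAD(self) -> None:
        self._send_maintenance(include_body=False)

    def log_message(self, format: str, *args) -> None:
        pass  # silence per-request logging for the example


def start_maintenance_server(port: int = 8080) -> HTTPServer:
    """Serve the maintenance response in a background thread (port 0 = any free port)."""
    server = HTTPServer(("127.0.0.1", port), MaintenanceHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

Returning 503 on every path, including deep URLs, matters: a maintenance page served with `200 OK` can be indexed as if it were the real content of each page.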

Monitor Search Console for crawl errors and indexing issues immediately following downtime. Request recrawling of important pages once your site returns to normal operation. Track ranking positions for critical keywords to identify and address persistent drops that may indicate deeper issues beyond temporary unavailability.

Measuring and Monitoring Downtime

Key Uptime Metrics

Website uptime percentage represents the primary metric for measuring availability. It calculates the proportion of time your site remains accessible and functional. Common uptime targets include 99.9% (allowing 43.8 minutes monthly downtime), 99.95% (21.9 minutes monthly), 99.99% (4.38 minutes monthly), and 99.999% (26.3 seconds monthly).
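The downtime allowances above follow from simple arithmetic. This sketch assumes an average month of 365.25 / 12 ≈ 30.44 days (43,830 minutes); a 30-day month gives slightly smaller budgets (43.2 minutes at 99.9%, for instance).

```python
# Average Gregorian month: 365.25 days / 12 = 30.4375 days = 43,830 minutes.
MINUTES_PER_MONTH = 365.25 / 12 * 24 * 60


def allowed_downtime_minutes(uptime_percent: float,
                             period_minutes: float = MINUTES_PER_MONTH) -> float:
    """Return the downtime budget implied by an uptime target over a period."""
    if not 0 <= uptime_percent <= 100:
        raise ValueError("uptime_percent must be between 0 and 100")
    return period_minutes * (1 - uptime_percent / 100)


if __name__ == "__main__":
    for target in (99.9, 99.95, 99.99, 99.999):
        minutes = allowed_downtime_minutes(target)
        print(f"{target}%: {minutes:.2f} min/month ({minutes * 60:.1f} s)")
```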

Understanding these percentages reveals the challenging reality of high availability. Even "five nines" (99.999%) uptime permits over 26 seconds of monthly downtime. Achieving and maintaining such reliability requires robust infrastructure, redundant systems, and proactive monitoring.

Response time measures how quickly your server responds to requests. While not strictly a downtime metric, degraded response times indicate performance issues that may precede outages. Monitoring response times from multiple global locations provides insight into regional performance variations.

Mean Time To Detect (MTTD) quantifies how quickly you identify downtime after it occurs. Faster detection enables quicker response, minimizing impact duration. Organizations with effective monitoring typically detect issues within seconds or minutes, while those relying on user reports may remain unaware of problems for hours.

Mean Time To Resolve (MTTR) measures average time from problem detection to full resolution. This metric reflects both technical capabilities and process effectiveness. Reducing MTTR requires efficient incident response procedures, skilled technical teams, and appropriate tools and resources.
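Both metrics are plain averages over incident records. The sketch below assumes each incident records when the failure started, when it was detected, and when it was resolved; the `Incident` fields are hypothetical names. It measures MTTR from detection, as defined above. Some teams measure it from the start of the failure instead, so state which convention your reports use.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean


@dataclass
class Incident:
    started: datetime   # when the failure began (often reconstructed from logs)
    detected: datetime  # when monitoring or a person noticed it
    resolved: datetime  # when service was fully restored


def mttd(incidents: list[Incident]) -> timedelta:
    """Mean time to detect: average of (detected - started)."""
    return timedelta(seconds=mean((i.detected - i.started).total_seconds() for i in incidents))


def mttr(incidents: list[Incident]) -> timedelta:
    """Mean time to resolve, measured from detection to full resolution."""
    return timedelta(seconds=mean((i.resolved - i.detected).total_seconds() for i in incidents))
```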

Uptime Monitoring Best Practices

Implement comprehensive monitoring that checks availability from multiple global locations. Regional outages may affect users in specific geographic areas while leaving other regions functional. Multi-location monitoring reduces false positives caused by a single probe's own network problems and identifies localized issues.

Configure frequent monitoring intervals—checking every one to five minutes provides near-real-time awareness of availability issues. More frequent monitoring (every 30-60 seconds) offers faster detection but generates more data and potentially more alerts. Balance frequency against alert fatigue and operational needs.
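One common way to balance detection speed against alert fatigue is to require agreement before paging anyone. The failure must show up across several locations and across consecutive checks. The class below is a hypothetical sketch of that idea; the 50% quorum and two-round defaults are placeholders, not recommendations.

```python
from collections import deque


class OutageDetector:
    """Alert only when enough locations fail for enough consecutive rounds.

    quorum: fraction of probe locations that must fail within one round.
    consecutive_rounds: number of failing rounds in a row before alerting.
    """

    def __init__(self, quorum: float = 0.5, consecutive_rounds: int = 2):
        self.quorum = quorum
        self.consecutive_rounds = consecutive_rounds
        self.recent = deque(maxlen=consecutive_rounds)  # last N round outcomes

    def record_round(self, results: dict[str, bool]) -> bool:
        """results maps location name -> True if the check succeeded there.

        Returns True when an alert should fire for this round.
        """
        if not results:
            raise ValueError("need at least one location result")
        failures = sum(1 for ok in results.values() if not ok)
        round_failed = failures / len(results) >= self.quorum
        self.recent.append(round_failed)
        return len(self.recent) == self.consecutive_rounds and all(self.recent)
```

Suppose checks run every minute with `consecutive_rounds=2`. An outage pages someone after roughly two minutes, while a single flaky probe never does. A production version would also suppress repeat alerts for an incident that is already open. It would still log single-location failures, since those can indicate a genuine regional problem.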

Monitor multiple aspects of website functionality beyond simple connectivity. Check that specific pages load correctly, forms submit properly, databases respond appropriately, APIs return expected results, and critical user journeys complete successfully. Comprehensive monitoring catches partial downtime that simple ping tests miss.

Establish clear alerting protocols that notify appropriate team members immediately when issues occur. Configure multiple alert channels—email, SMS, phone calls, Slack, PagerDuty—ensuring critical notifications reach responsible parties regardless of circumstance. Implement escalation procedures for unacknowledged alerts.

Essential Monitoring Components

HTTP/HTTPS monitoring verifies that web servers respond to requests with correct status codes. These checks simulate user browsers requesting pages and validate that servers return content successfully. Monitor both GET and POST requests to ensure all functionality works properly.
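A basic HTTP check only needs to issue a request, record the status code, and time the round trip. That also covers the response-time metric described earlier. This is a minimal standard-library sketch; `http_check` and `CheckResult` are hypothetical names. Passing `data` makes `urllib.request.urlopen` send a POST instead of a GET. Hosted monitoring services run equivalent probes on a schedule from many locations.

```python
import time
import urllib.error
import urllib.request
from dataclasses import dataclass
from typing import Optional


@dataclass
class CheckResult:
    ok: bool
    status: Optional[int]       # None when no HTTP response arrived at all
    latency_s: float
    error: Optional[str] = None


def http_check(url: str, timeout: float = 10.0, expected_status: int = 200,
               data: Optional[bytes] = None) -> CheckResult:
    """Request url once (POST if data is given) and record status and latency."""
    start = time.monotonic()
    try:
        with urllib.request.urlopen(url, data=data, timeout=timeout) as resp:
            resp.read()  # include the body download in the measured latency
            status = resp.status
    except urllib.error.HTTPError as exc:  # 4xx/5xx responses still carry a status
        status = exc.code
    except OSError as exc:  # URLError, refused connections, and timeouts
        return CheckResult(False, None, time.monotonic() - start, str(exc))
    latency = time.monotonic() - start
    return CheckResult(status == expected_status, status, latency)
```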

Port monitoring checks that specific network ports remain open and responsive. Different services use different ports: HTTP uses port 80, HTTPS uses 443, FTP uses 21, and email services use several, such as 25 and 587 for SMTP and 993 for IMAP over TLS. Monitoring ensures all required services remain available.
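At the TCP level, a port check is simply an attempt to open a connection. This sketch uses a hypothetical `port_open` function. It confirms only that something is listening, not that the service behind the port responds correctly; the HTTP and content checks cover that.

```python
import socket


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, timed out, or name resolution failed
        return False
```

For example, `port_open("example.com", 443)` checks that the HTTPS port accepts connections.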

SSL certificate monitoring prevents embarrassing and damaging certificate expiration. Expired SSL certificates trigger browser security warnings that prevent users from accessing sites. Monitoring certificate validity with advance warnings allows renewal before expiration.
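Certificate expiry can be checked with Python's `ssl` module. It exposes the `notAfter` field of the peer certificate, and `ssl.cert_time_to_seconds` converts that field to a timestamp. The sketch below assumes a 21-day warning window, an arbitrary placeholder; choose a window longer than your renewal process takes. The function names are hypothetical.

```python
import socket
import ssl
import time
from typing import Optional


def days_until_expiry(not_after: str, now: Optional[float] = None) -> float:
    """Convert a notAfter value such as 'Jun  1 12:00:00 2025 GMT' to days remaining."""
    expires_at = ssl.cert_time_to_seconds(not_after)
    return (expires_at - (time.time() if now is None else now)) / 86400


def fetch_not_after(host: str, port: int = 443, timeout: float = 5.0) -> str:
    """Read notAfter from the certificate the server presents.

    The default context verifies the chain, so an already-expired certificate
    makes the handshake fail with ssl.SSLCertVerificationError; a monitor
    should treat that exception as an alert as well.
    """
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["notAfter"]


def needs_renewal(not_after: str, warn_days: int = 21, now: Optional[float] = None) -> bool:
    return days_until_expiry(not_after, now) < warn_days
```

A scheduled job would call `needs_renewal(fetch_not_after("example.com"))` and alert when it returns True.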

DNS monitoring verifies that domain name resolution functions correctly. DNS issues prevent users from reaching sites despite servers operating normally. Regular DNS checks from multiple locations ensure resolution works globally.
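The simplest DNS check resolves the name and compares the answer with the addresses you expect. This sketch uses the local system resolver through `socket.getaddrinfo`. It therefore sees cached answers and tests only one vantage point. Checking authoritative servers from several regions, as recommended above, needs a dedicated DNS library or a monitoring service. The function names are hypothetical, and so is the "any expected address" rule, which assumes a setup where a CDN may return different addresses.

```python
import socket


def resolve_ipv4(hostname: str) -> set[str]:
    """Return the IPv4 addresses the local resolver gives for hostname."""
    infos = socket.getaddrinfo(hostname, None, family=socket.AF_INET, type=socket.SOCK_STREAM)
    return {info[4][0] for info in infos}


def dns_check(hostname: str, expected: set[str]) -> bool:
    """True if resolution succeeds and returns at least one expected address."""
    try:
        addresses = resolve_ipv4(hostname)
    except socket.gaierror:  # NXDOMAIN, resolver failure, etc.
        return False
    return bool(addresses & expected)
```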

Content verification monitoring checks that pages contain expected content. A server might respond successfully while serving error messages or incorrect content. Keyword monitoring confirms that pages display their real content rather than fallback error pages.
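Content verification can be as simple as looking for text that must appear and text that must not. The error markers below are examples. The first is the message WordPress displays when it cannot reach its database. `required` would list phrases specific to your own pages; "Acme Store" in the usage line is a hypothetical placeholder.

```python
# Example error markers; extend with messages your own stack produces.
DEFAULT_ERROR_MARKERS = (
    "Error establishing a database connection",  # WordPress database failure page
    "Internal Server Error",
)


def verify_content(body: str, required: list[str],
                   error_markers: tuple[str, ...] = DEFAULT_ERROR_MARKERS) -> list[str]:
    """Return a list of problems; an empty list means the page looks healthy."""
    problems = [f"missing expected text: {kw!r}" for kw in required if kw not in body]
    problems += [f"found error text: {m!r}" for m in error_markers if m in body]
    return problems
```

For example, `verify_content(page_html, ["Acme Store", "Add to cart"])` flags a page that returned `200 OK` but lost its storefront content.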

Synthetic monitoring simulates real user interactions through scripted tests. These tests can navigate multi-step processes—logging in, adding items to carts, completing checkout—verifying that complex user journeys function end-to-end. Synthetic monitoring catches functionality failures that simple availability checks miss.

Real User Monitoring (RUM) tracks actual user experiences, measuring load times, errors, and performance from real devices and locations. Unlike synthetic monitoring that tests from predetermined locations, RUM provides insight into how real users experience your site across diverse conditions.

Preventing Website Downtime

Infrastructure Optimization

Choose reliable hosting providers with proven uptime records. Research potential hosts thoroughly—examine their historical uptime data, read customer reviews, verify their service level agreements, and understand their infrastructure redundancy. Reputable providers typically guarantee 99.9% or higher uptime.

Implement redundant systems that eliminate single points of failure. Load balancers distribute traffic across multiple servers, ensuring that if one fails, others continue serving requests. Redundant power supplies, network connections, and storage systems further protect against hardware failures.
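The core of health-aware round-robin load balancing fits in a few lines. This is an illustrative sketch, not how any particular load balancer is implemented, and the backend names are hypothetical. In a real deployment, periodic health checks would drive `mark_down` and `mark_up`.

```python
import itertools


class RoundRobinBalancer:
    """Rotate requests across backends, skipping any marked unhealthy."""

    def __init__(self, backends: list[str]):
        self.backends = list(backends)
        self.healthy = set(self.backends)
        self._cycle = itertools.cycle(self.backends)

    def mark_down(self, backend: str) -> None:
        self.healthy.discard(backend)

    def mark_up(self, backend: str) -> None:
        if backend in self.backends:
            self.healthy.add(backend)

    def next_backend(self) -> str:
        # Try at most one full rotation; if nothing is healthy, fail loudly.
        for _ in range(len(self.backends)):
            candidate = next(self._cycle)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy backends available")
```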

Utilize Content Delivery Networks (CDNs) to distribute content globally and reduce server load. CDNs cache static content on servers worldwide, serving users from nearby locations for faster load times and reduced origin server demands. Many CDNs also offer DDoS protection and can absorb traffic spikes.

Scale infrastructure appropriately for traffic demands. Regular capacity planning ensures servers handle peak loads comfortably. Cloud hosting platforms offer auto-scaling that automatically adds resources during traffic spikes, preventing overload-induced downtime.
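Many auto-scalers use a target-tracking calculation. The Kubernetes Horizontal Pod Autoscaler, for example, documents its core rule as desired replicas = ceil(current replicas × current metric / target metric). The sketch below applies that rule with minimum and maximum bounds; the bounds are placeholder values. Real autoscalers also add tolerances and cooldowns to avoid flapping.

```python
import math


def desired_replicas(current: int, current_util: float, target_util: float,
                     min_replicas: int = 2, max_replicas: int = 20) -> int:
    """Target-tracking scale decision: ceil(current * current_util / target_util),
    clamped to [min_replicas, max_replicas]."""
    if current < 1 or target_util <= 0:
        raise ValueError("current must be >= 1 and target_util > 0")
    desired = math.ceil(current * current_util / target_util)
    return max(min_replicas, min(max_replicas, desired))
```

For example, four servers averaging 90% CPU against a 60% target scale to six.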

Security Hardening

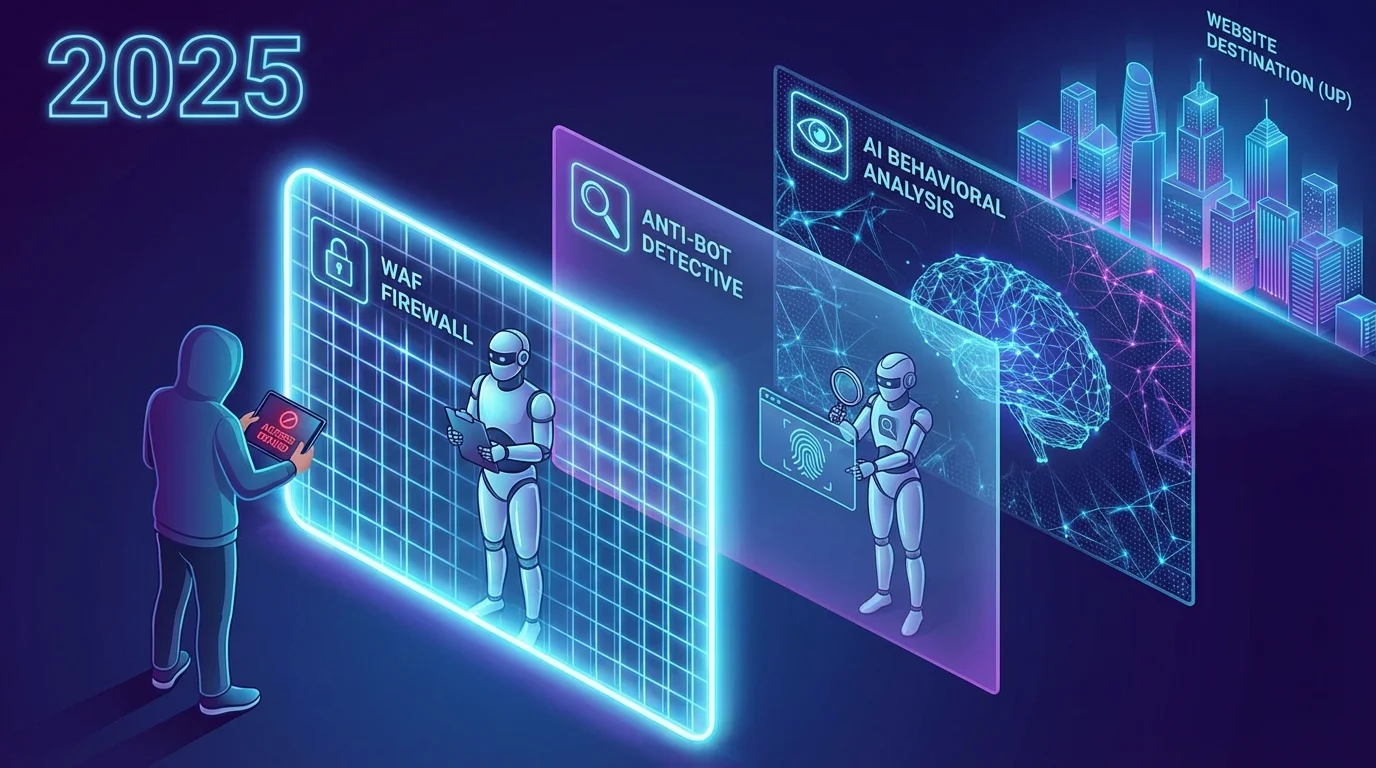

Implement robust DDoS protection through specialized services that filter malicious traffic before it reaches your servers. Modern DDoS attacks can generate hundreds of gigabits per second of traffic, overwhelming even large infrastructures without proper mitigation.

Maintain strong security practices including regular security audits, vulnerability assessments, penetration testing, and prompt application of security patches. Keep all software—operating systems, web servers, applications, plugins—updated with latest security fixes.

Use Web Application Firewalls (WAF) to filter malicious requests and protect against common attacks like SQL injection, cross-site scripting, and other exploits. WAFs analyze incoming traffic patterns and block suspicious requests before they reach your application.

Implement rate limiting to prevent abuse and protect against automated attacks. Rate limiting restricts the number of requests from individual IP addresses or users within specific timeframes, preventing both malicious attacks and unintentional overload from misbehaving clients.
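A token bucket is one common rate-limiting algorithm. Each client gets a bucket that holds up to `capacity` tokens and refills at `rate` tokens per second; each request spends one token. This sketch keeps one bucket per client key in memory, and the class names are hypothetical. The clock is injectable for testing. A production limiter would evict idle buckets and usually share state across servers (for example in Redis).

```python
import time
from typing import Callable


class TokenBucket:
    """Allow bursts up to `capacity` requests, refilling at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill for the time elapsed since the last call, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class RateLimiter:
    """One bucket per client key, such as an IP address or API token."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic):
        self.rate, self.capacity, self.clock = rate, capacity, clock
        self.buckets: dict[str, TokenBucket] = {}  # grows without bound in this sketch

    def allow(self, client: str) -> bool:
        bucket = self.buckets.get(client)
        if bucket is None:
            bucket = self.buckets[client] = TokenBucket(self.rate, self.capacity, self.clock)
        return bucket.allow()
```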

Proactive Maintenance

Establish regular maintenance schedules for updates, patches, and infrastructure improvements. Scheduled maintenance prevents larger problems that could cause unplanned downtime. Communicate maintenance windows to users and schedule them during low-traffic periods.

Test all changes in staging environments before deploying to production. Staging environments that mirror production configurations allow teams to identify problems before they affect users. Never deploy untested code or configuration changes directly to live systems.

Maintain comprehensive backups of all critical data, configurations, and systems. Automated daily backups ensure you can restore functionality quickly following failures. Store backups in geographically separate locations to protect against regional disasters. Test backup restoration regularly—untested backups are worthless.

Monitor server health continuously—track CPU usage, memory consumption, disk space, network bandwidth, and application performance. Proactive monitoring identifies developing problems before they cause outages. Set threshold alerts that notify teams when metrics approach concerning levels.
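Threshold alerting comes down to comparing collected metrics with limits. This standard-library sketch covers disk usage and, on Unix, load average per CPU. Memory and bandwidth usually come from an agent or a library such as `psutil`. The metric names and thresholds are illustrative assumptions.

```python
import os
import shutil


def collect_metrics(path: str = "/") -> dict[str, float]:
    """Gather basic host metrics; load average is only available on Unix."""
    usage = shutil.disk_usage(path)
    metrics = {"disk_used_pct": 100 * usage.used / usage.total}
    if hasattr(os, "getloadavg"):
        metrics["load_per_cpu"] = os.getloadavg()[0] / (os.cpu_count() or 1)
    return metrics


def breaches(metrics: dict[str, float], thresholds: dict[str, float]) -> list[str]:
    """Return a message for every metric at or above its threshold."""
    return [
        f"{name} is {value:.1f} (threshold {thresholds[name]})"
        for name, value in metrics.items()
        if name in thresholds and value >= thresholds[name]
    ]
```

A scheduler would run `breaches(collect_metrics(), {"disk_used_pct": 85, "load_per_cpu": 1.5})` every minute or so and route any messages to the alerting channels described earlier.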

Code Quality and Development Practices

Implement rigorous code review processes that catch bugs before deployment. Peer reviews, automated testing, and continuous integration practices reduce the likelihood of introducing errors into production systems.

Use automated testing extensively—unit tests verify individual components, integration tests validate that components work together correctly, and end-to-end tests confirm complete user workflows function properly. Comprehensive test suites catch regressions and prevent known issues from recurring.

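Deploy code gradually using techniques like canary deployments and blue-green deployments. Canary deployments release changes to a small percentage of users first, so teams can spot problems while the impact is limited before rolling out to everyone. Blue-green deployments run the new version in a second, identical environment and switch traffic over only once it checks out, which makes rollback as simple as switching back.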
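Canary rollouts need a stable way to decide which users see the new version. One common approach, sketched below with assumed names, hashes a user identifier with a per-release salt into 10,000 buckets. A user's assignment stays the same across requests. Anyone in a 5% canary also stays included when the rollout widens to 10%.

```python
import hashlib


def in_canary(user_id: str, percent: float, salt: str = "release-2025-01") -> bool:
    """Deterministically place roughly `percent`% of users in the canary group.

    The salt (a hypothetical release label) reshuffles assignments per release.
    """
    digest = hashlib.sha256(f"{salt}:{user_id}".encode()).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # 0..9999, i.e. 0.01% steps
    return bucket < percent * 100
```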

Maintain detailed change logs and version control. When problems occur, teams need quick access to recent changes to identify potential causes. Version control systems enable rapid rollback to previous stable versions if deployed changes cause issues.

Dependency Management

Minimize reliance on external services where possible. Each third-party dependency represents a potential failure point beyond your control. Evaluate whether external services provide sufficient value to justify the availability risk they introduce.

Implement fallback mechanisms for critical third-party services. If payment processing APIs fail, provide alternative payment methods. If analytics services go offline, queue analytics data locally until services recover. Graceful degradation maintains core functionality despite external failures.
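A circuit breaker is one widely used way to implement this kind of graceful degradation. After repeated failures, it stops calling the dependency for a cool-down period and serves a fallback immediately, so requests no longer wait on timeouts. This is a single-threaded sketch with hypothetical names and placeholder limits. The fallback could return cached recommendations, hide an optional feature, or queue analytics events locally.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitBreaker:
    """Open after `max_failures` consecutive failures; retry after `reset_after` seconds."""

    def __init__(self, max_failures: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, func: Callable[[], T], fallback: Callable[[], T]) -> T:
        half_open = False
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                return fallback()  # open: don't touch the failing dependency
            half_open = True       # cool-down elapsed: let one trial call through
        try:
            result = func()
        except Exception:
            self.failures += 1
            if half_open or self.failures >= self.max_failures:
                self.opened_at = self.clock()  # (re)open the circuit
            return fallback()
        self.failures = 0
        self.opened_at = None  # success closes the circuit
        return result
```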

Monitor third-party service status through their public status pages and API health endpoints. Many providers offer webhooks or status check endpoints that alert you to their service issues. Proactive awareness enables faster response to dependency failures.

Responding to Downtime Incidents

Immediate Response Actions

When downtime occurs, time becomes critical. Every second of delay extends user impact and increases costs. Establish clear incident response procedures that team members can execute immediately without confusion or deliberation.

First, verify the problem scope. Determine whether the issue affects the entire site or specific components, which geographic regions experience problems, and whether the issue originates internally or from external dependencies. Quick assessment enables targeted response rather than unfocused troubleshooting.

Communicate immediately with stakeholders. Notify users through social media, email, status pages, and any other relevant channels that you're aware of the problem and working on resolution. Transparency maintains trust even during failures—users appreciate knowing that problems are being addressed.

Activate your incident response team with clearly defined roles. Designate an incident commander to coordinate response, technical specialists to diagnose and resolve issues, and communication managers to keep stakeholders informed. Structured roles prevent duplicate effort and ensure all necessary tasks receive attention.

Diagnosis and Resolution

Systematic troubleshooting accelerates problem resolution. Check obvious issues first—server status, network connectivity, recent configuration changes, traffic patterns. Many problems have simple causes that quick investigation reveals.

Review logs comprehensively. Server logs, application logs, error logs, and access logs contain valuable diagnostic information. Look for error patterns, unusual activity, and correlation between events and downtime onset.
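When access logs use the Common or Combined Log Format, a short script can show when errors began. This sketch computes the share of 5xx responses per minute and reports the first minute above a threshold as a starting guess at downtime onset. The 5% threshold and the regular expression are assumptions; adapt them to your own log format.

```python
import re
from collections import Counter
from datetime import datetime
from typing import Iterable, Optional

# Captures the timestamp and status code of Common/Combined Log Format lines.
LOG_PATTERN = re.compile(r'\[(?P<ts>[^\]]+)\] "[^"]*" (?P<status>\d{3})\b')


def error_rate_by_minute(lines: Iterable[str]) -> dict[datetime, float]:
    """Map each minute to the fraction of its requests that returned 5xx."""
    totals, errors = Counter(), Counter()
    for line in lines:
        m = LOG_PATTERN.search(line)
        if not m:
            continue  # skip lines in other formats
        minute = datetime.strptime(m["ts"], "%d/%b/%Y:%H:%M:%S %z").replace(second=0)
        totals[minute] += 1
        if m["status"].startswith("5"):
            errors[minute] += 1
    return {minute: errors[minute] / totals[minute] for minute in sorted(totals)}


def first_bad_minute(rates: dict[datetime, float], threshold: float = 0.05) -> Optional[datetime]:
    """Earliest minute whose 5xx share meets the threshold, or None."""
    return next((minute for minute, rate in rates.items() if rate >= threshold), None)
```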

Isolate the problem by testing individual components. If specific pages fail while others work, investigate those pages. If particular servers report errors while others function normally, examine those servers. Methodical isolation identifies root causes faster than broad, unfocused investigation.

Engage appropriate expertise quickly. Complex problems may require specialized knowledge—database administrators for database issues, network engineers for connectivity problems, security specialists for attack mitigation. Don't hesitate to escalate problems beyond initial responders when necessary.

Post-Incident Analysis

After resolving downtime, conduct thorough post-incident reviews. Analyze what happened, why it happened, how quickly the team responded, and what could prevent similar incidents in the future. Blameless postmortems focus on system improvements rather than individual fault.

Document incidents comprehensively including timeline of events, actions taken, decisions made, and lessons learned. This documentation serves as organizational memory, preventing repeated mistakes and accelerating response to similar future incidents.

Implement preventive measures identified during post-incident analysis. If downtime resulted from insufficient capacity, scale infrastructure. If monitoring gaps delayed detection, enhance monitoring coverage. Turn every incident into an opportunity for improvement.

Building Downtime Resilience

Designing for Failure

Accept that failures will occur and design systems accordingly. Rather than attempting to prevent all possible failures—an impossible goal—build systems that tolerate failures gracefully and recover quickly.

Implement redundancy at every layer. Multiple web servers behind load balancers ensure that individual server failures don't take sites offline. Database replication provides failover capability when primary databases fail. Geographic distribution protects against regional outages.

Design for graceful degradation where systems maintain core functionality despite component failures. If recommendation engines fail, websites should still allow browsing and purchasing. If analytics services go offline, core business processes should continue unaffected.

Disaster Recovery Planning

Develop comprehensive disaster recovery plans that address various failure scenarios—complete data center outages, natural disasters, cyberattacks, data corruption. Each scenario requires specific response procedures and recovery strategies.

Document recovery procedures clearly so any team member can execute them under pressure. Include step-by-step instructions, required tools and credentials, contact information for critical personnel and vendors, and expected recovery timelines.

Test disaster recovery plans regularly through scheduled drills and simulations. Testing reveals gaps in procedures, identifies missing resources, and builds team confidence in executing recovery under stress. Update plans based on test findings.

Establish Recovery Time Objectives (RTO) and Recovery Point Objectives (RPO) for different services. RTO specifies maximum acceptable downtime for each service, while RPO defines maximum acceptable data loss. These objectives guide technology investments and process development.

Creating a Culture of Reliability

Foster organizational commitment to reliability that extends beyond technical teams. Business leaders must understand downtime impacts and support investments in prevention and response capabilities.

Incentivize reliability through metrics, goals, and recognition. Track and report uptime metrics prominently. Celebrate teams that achieve high availability. Make reliability a first-class concern alongside feature development and other priorities.

Provide ongoing training for technical teams on best practices, new technologies, and lessons from past incidents. Well-trained teams respond more effectively and make better preventive decisions.

Communicating During Downtime

Status Page Best Practices

Maintain public status pages that provide real-time information about service availability. Status pages should display current system status, details about ongoing incidents, estimated resolution times, and historical uptime data.
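As a rough sketch of the logic behind a status page, the example below derives the headline status from the worst-affected component and computes historical uptime as the share of successful probes. The `Status` levels, component names, and one-minute probe interval are assumptions for illustration; hosted status page products define their own models.

```python
from enum import IntEnum


class Status(IntEnum):
    # Ordered by severity so max() yields the worst component status.
    OPERATIONAL = 0
    DEGRADED = 1
    PARTIAL_OUTAGE = 2
    MAJOR_OUTAGE = 3


def overall_status(components: dict[str, Status]) -> Status:
    """The page headline reflects the worst-affected component."""
    return max(components.values(), default=Status.OPERATIONAL)


def uptime_percent(check_results: list[bool]) -> float:
    """Share of successful probes, as shown in a historical uptime bar."""
    if not check_results:
        raise ValueError("no check results")
    return 100.0 * sum(check_results) / len(check_results)


components = {"website": Status.OPERATIONAL, "api": Status.DEGRADED, "checkout": Status.OPERATIONAL}
print(overall_status(components).name)  # DEGRADED
# One failed probe in a day of one-minute checks (1,440 probes).
print(uptime_percent([True] * 1439 + [False]))
```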

Update status pages immediately when issues occur and throughout incident response. Users checking status pages during downtime seek reassurance that problems are acknowledged and being addressed. Frequent updates demonstrate active response even if resolution takes time.

Allow users to subscribe to status page notifications via email, SMS, or RSS feeds. Proactive notification prevents repeated manual checking and demonstrates commitment to transparency.

Social Media Communication

Use social media platforms, particularly X (formerly Twitter), for real-time downtime communication. Many users turn to social media first when experiencing access problems. Prompt acknowledgment and updates through these channels reach users where they already engage.

Respond to user reports and questions on social media quickly and professionally. Every response demonstrates attentiveness and builds confidence that your team takes availability seriously.

Email Notifications

Send email notifications to affected users when significant outages occur, particularly if downtime lasts more than a few minutes. Emails should acknowledge the problem, explain what happened, outline resolution steps, and provide estimated restoration times.

Follow up with post-incident emails that explain root causes, preventive measures being implemented, and any compensation or remediation being offered. Transparency about problems and solutions maintains trust despite failures.

The Future of Website Uptime

Emerging Technologies

Artificial intelligence and machine learning increasingly enable predictive maintenance that identifies potential failures before they cause downtime. AI systems analyze historical patterns, current metrics, and environmental factors to forecast problems and recommend preventive actions.
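Production predictive systems use far richer models, but a simple statistical baseline shows the core idea: flag a metric that drifts well above its recent norm before users notice an outage. The sketch below uses a rolling z-score on response times, a plain statistical technique rather than machine learning; the window size, threshold, and sample values are arbitrary assumptions.

```python
from collections import deque
from statistics import mean, stdev


class ResponseTimeWatcher:
    """Flag response times far above the recent baseline (rolling z-score)."""

    def __init__(self, window: int = 60, threshold: float = 3.0):
        self.samples = deque(maxlen=window)  # only the most recent samples
        self.threshold = threshold

    def observe(self, ms: float) -> bool:
        """Record a sample; return True if it is anomalous versus prior samples."""
        anomalous = False
        if len(self.samples) >= 10:  # need some history before judging
            mu, sigma = mean(self.samples), stdev(self.samples)
            if sigma > 0 and (ms - mu) / sigma > self.threshold:
                anomalous = True
        self.samples.append(ms)
        return anomalous


watcher = ResponseTimeWatcher()
baseline = [200, 210, 190, 205, 195, 200, 215, 185, 200, 210]
flags = [watcher.observe(ms) for ms in baseline + [480]]
print(flags[-1])  # True: 480 ms is far above the ~200 ms baseline
```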

Edge computing distributes processing closer to users, reducing reliance on centralized infrastructure and improving resilience. Well-designed edge deployments help contain regional issues to nearby users rather than letting them cause global outages.

Blockchain and distributed ledger technologies offer new approaches to ensuring data integrity and system reliability. Decentralized architectures can reduce the single points of failure inherent in traditional centralized systems.

Evolving Standards

Industry standards for availability continue rising as digital dependence increases. What seemed acceptable a decade ago, 99.9% uptime allowing about 43 minutes of downtime per month, now falls short of user expectations. Modern services target 99.99% or higher, which permits only about 4.3 minutes of downtime in a 30-day month.
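The downtime allowed by an availability target is simple arithmetic: total minutes in the period multiplied by the unavailable fraction. The helper below is a small sketch that assumes a 30-day month; longer months allow slightly more downtime.

```python
def downtime_budget_minutes(availability_pct: float, days: float = 30) -> float:
    """Maximum downtime allowed by an availability target over a period."""
    total_minutes = days * 24 * 60  # 43,200 minutes in a 30-day month
    return total_minutes * (1 - availability_pct / 100)


for target in (99.9, 99.95, 99.99, 99.999):
    print(f"{target}% -> {downtime_budget_minutes(target):.1f} min per 30-day month")
```

This prints budgets of 43.2, 21.6, 4.3, and 0.4 minutes respectively; at 99.999% ("five nines") a service can be down for only about 26 seconds a month.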

Service Level Agreements (SLAs) increasingly include not just availability percentages but also performance guarantees, response time commitments, and incident response timelines. Providers differentiate through comprehensive reliability commitments beyond simple uptime metrics.

Regulatory Considerations

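Governments worldwide increasingly regulate digital service availability, particularly for critical infrastructure and financial services. Depending on the jurisdiction and sector, these rules can impose operational resilience requirements, incident reporting obligations, and penalties for failures. The EU's Digital Operational Resilience Act (DORA), for example, requires financial entities to manage ICT risk and report major ICT-related incidents.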

Organizations must stay informed about regulatory obligations affecting their industries and ensure compliance with evolving standards. Failure to meet regulatory requirements can result in fines, sanctions, and reputational damage beyond downtime costs themselves.

Conclusion: The Imperative of Availability

Website downtime represents far more than a technical inconvenience: it threatens business viability in our digitally dependent economy. Every minute offline costs money, erodes customer trust, damages search rankings, and gives competitors opportunities to capture your audience.

Understanding downtime comprehensively—its types, causes, impacts, and prevention strategies—empowers organizations to maintain the reliability modern users demand. No website achieves perfect uptime, but proper preparation, monitoring, and response minimize downtime frequency and duration.

The investments required for high availability (robust infrastructure, comprehensive monitoring, skilled teams, efficient processes) are typically modest compared with the cost of downtime. Organizations that prioritize availability gain competitive advantage through superior reliability that builds customer trust and supports business growth.

As digital transformation accelerates and online presence becomes increasingly critical across all industries, downtime tolerance continues declining. The future belongs to organizations that recognize availability as fundamental to success and commit resources accordingly.

Start by understanding your current uptime, implementing comprehensive monitoring, developing clear response procedures, and continuously improving based on incidents and near-misses. Every step toward greater reliability protects your business, serves your customers better, and positions you for success in an increasingly digital world.

Check Your Website Status Now

Don't wait for downtime to impact your business. Use our free website status checker at IsYourWebsiteDownRightNow.com to instantly verify your site's availability from multiple global locations. Our tool checks server status, response times, and SSL certificates, helping you maintain the uptime your users expect and your business demands.